Blazing Fast Pairwise Cosine Similarity

I accidentally implemented the fastest pairwise cosine similarity function

While searching for a way to efficiently compute pairwise cosine similarity between vectors, I ended up with a simple implementation in PyTorch. In the benchmarks below, it is about 10x faster than the popular cosine_similarity function from sklearn and several orders of magnitude faster than naive loop-based implementations.

def pairwise_cosine_similarity(tensor: torch.Tensor) -> torch.Tensor:

About cosine similarity

Cosine similarity is an intuitive metric for measuring the similarity between two vectors. It is widely used in vision, recommendation systems, search engines, and natural language processing. The cosine similarity between two vectors is defined as the cosine of the angle between them. It ranges from -1 to 1, where 1 means the vectors point in the same direction (regardless of their lengths), -1 means they point in opposite directions, and 0 means they are orthogonal.
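As a quick illustration of those three reference values, here is a minimal pure-Python sketch. The helper name `cosine` and the toy vectors are made up for this example and are not part of the implementation discussed in this post.

```python
import math

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors (hypothetical helper)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

same_direction = cosine([1.0, 2.0], [2.0, 4.0])   # parallel, different lengths
opposite = cosine([1.0, 2.0], [-1.0, -2.0])       # anti-parallel
orthogonal = cosine([1.0, 0.0], [0.0, 1.0])       # perpendicular
```

Up to floating-point rounding, the three results are 1, -1, and 0. Note that the first pair is not identical: the vectors only share a direction.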

Edit (March 2024): Be cautious about using cosine similarity when working with embeddings. Please check out "Is Cosine-Similarity of Embeddings Really About Similarity?"

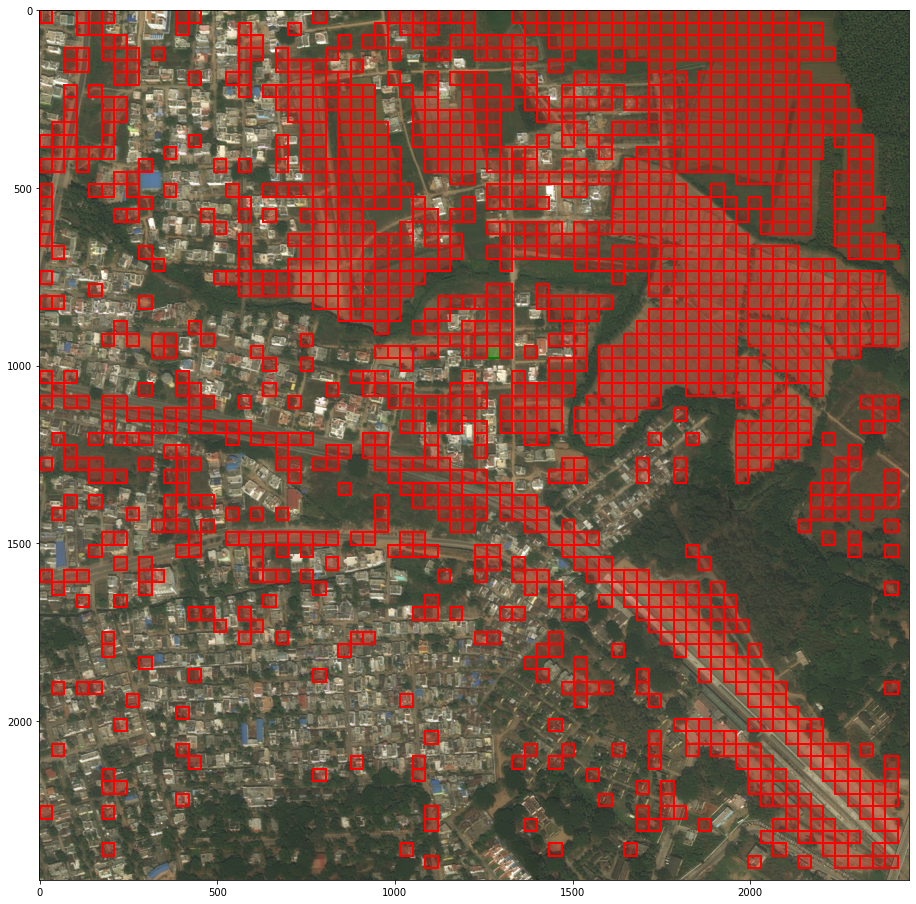

The use case

We usually want to compute the cosine similarity between all pairs of vectors. This enables things like searching for similar vectors (e.g. finding similar images) and analyzing the structure of the vector space (e.g. clustering images). However, with a large matrix, computing all pairwise similarities can be computationally expensive.

We often have a matrix of shape $(N, D)$ where $N$ is the number of vectors and $D$ is the dimensionality of each vector. $D$ is also called the embedding size or dimension, and is often a power of 2, e.g. 64, 512, 1024, 4096, etc.

The method

The method is based on the following formula:

$$\text{sim}(v_i, v_j) = \frac{v_i \cdot v_j}{\lVert v_i \rVert \lVert v_j \rVert}$$
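To see why a single matrix product is enough, it can help to write the formula for all pairs at once. Assuming the $N$ vectors are stacked as the rows of a matrix $X$ of shape $(N, D)$ (the symbols $X$, $G$, $n$, and $S$ are introduced here only for illustration):

$$G = X X^\top, \qquad G_{ij} = v_i \cdot v_j, \qquad n_i = \sqrt{G_{ii}} = \lVert v_i \rVert, \qquad S_{ij} = \frac{G_{ij}}{n_i \, n_j} = \text{sim}(v_i, v_j)$$

The numerators are the entries of $G$, and the norms needed for the denominators sit on the diagonal of $G$, so no separate norm computation is required.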

Let’s explain what the code does:

tmm = torch.mm(tensor, tensor.T)

Numerator matrix: Dot product via matrix multiplication

`tmm = torch.mm(tensor, tensor.T)`

This line computes the matrix multiplication of the input `tensor` with its transpose `tensor.T`. The result is a tensor `tmm` of shape $(N, N)$ where each element $(i, j)$ represents the dot product of vectors $i$ and $j$.

Denominator values: Norm of each vector

`denom = torch.sqrt(tmm.diagonal()).unsqueeze(0)`

The diagonal of the tensor `tmm` contains the dot products of each vector with itself. Taking the square root of these values gives the magnitude (or norm) of each vector. The `unsqueeze(0)` function is used to add an extra dimension to the tensor, changing its shape from $(N,)$ to $(1, N)$.

Denominator matrix

`denom_mat = torch.mm(denom.T, denom)`

This line computes the outer product of the vector `denom` with itself, resulting in a matrix `denom_mat` of shape $(N, N)$. Each element $(i, j)$ of this matrix is the product of the magnitudes of vectors $i$ and $j$.

NaN removal

`return torch.nan_to_num(tmm / denom_mat)`

Finally, the cosine similarity between each pair of vectors is calculated by dividing the dot product matrix `tmm` by the matrix `denom_mat`. The division is element-wise, so each element $(i, j)$ of the resulting matrix represents the cosine similarity between vectors $i$ and $j$. `torch.nan_to_num` is used to replace any NaN values that might occur during the division with zeros.

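The output is a symmetric matrix whose diagonal elements are all 1 (the cosine similarity of a nonzero vector with itself is always 1, up to floating-point error), and whose off-diagonal elements are the cosine similarities between different pairs of vectors. The one exception is a zero vector: its division is 0/0, so its entire row and column, including the diagonal entry, end up as 0 after the NaN replacement.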
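Putting the four steps together, the function might look like the sketch below. It is assembled from the lines explained above; the example input `x`, including its all-zero row, is an assumption added for illustration.

```python
import torch

def pairwise_cosine_similarity(tensor: torch.Tensor) -> torch.Tensor:
    # (N, N) matrix of dot products between every pair of row vectors
    tmm = torch.mm(tensor, tensor.T)
    # The diagonal holds squared norms; sqrt gives the norms, reshaped to (1, N)
    denom = torch.sqrt(tmm.diagonal()).unsqueeze(0)
    # Outer product of the norms: entry (i, j) is ||v_i|| * ||v_j||
    denom_mat = torch.mm(denom.T, denom)
    # Element-wise division; 0/0 from zero vectors gives NaN, which is mapped to 0
    return torch.nan_to_num(tmm / denom_mat)

# Illustrative input (assumed): 3 vectors of dimension 2, the last one all zeros
x = torch.tensor([[1.0, 0.0], [0.0, 2.0], [0.0, 0.0]])
sim = pairwise_cosine_similarity(x)
```

Here the first two rows are orthogonal, so their similarity is 0, and the all-zero row yields 0 everywhere instead of NaN.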

Benchmarking

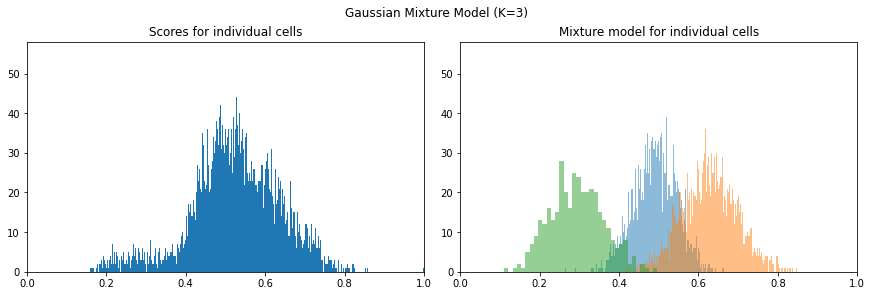

Our approach outperforms naive loops by several orders of magnitude. It is about 10x faster than sklearn.metrics.pairwise.cosine_similarity, and about 2-3x faster than a NumPy implementation using the exact same logic.
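For reference, a NumPy version following the same four steps might look like the sketch below. This is an assumption about what such a port looks like, not the exact code that was benchmarked; the function name `pairwise_cosine_similarity_np` and the random toy input are hypothetical.

```python
import numpy as np

def pairwise_cosine_similarity_np(matrix: np.ndarray) -> np.ndarray:
    # Dot products between all pairs of rows, shape (N, N)
    mm = matrix @ matrix.T
    # Norm of each row, read off the diagonal of the dot-product matrix
    norms = np.sqrt(np.diagonal(mm))
    # Outer product of the norms gives the denominator for each pair
    denom_mat = np.outer(norms, norms)
    # Silence the 0/0 warning caused by zero vectors, then map NaN to 0
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.nan_to_num(mm / denom_mat)

rng = np.random.default_rng(0)
vectors = rng.standard_normal((5, 8))  # assumed toy input: N=5, D=8
sim_np = pairwise_cosine_similarity_np(vectors)
```

Unlike PyTorch, NumPy emits a runtime warning on 0/0, which is why the division is wrapped in `np.errstate`.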

Results

Result within 1e-8 of scipy loop: True

Benchmark code

Here is the benchmark code if anybody wishes to reproduce it:

import torch